The Google Search Console Coverage tab is a huge time saver for identifying which pages are appearing in Google and which are not. This part of GSC can help you quickly identify and troubleshoot issues with your site’s content which might be keeping it out of Google.

It’s a good idea to periodically check here for any coverage issues your site is experiencing to ensure that every page which should be appearing in Google actually is.

Let’s talk about how to take the snapshot that the Google Search Console Coverage tool gives you and fix your content with it.

First, sign up for a free Google Search Console account and connect it to your website if you haven’t already.

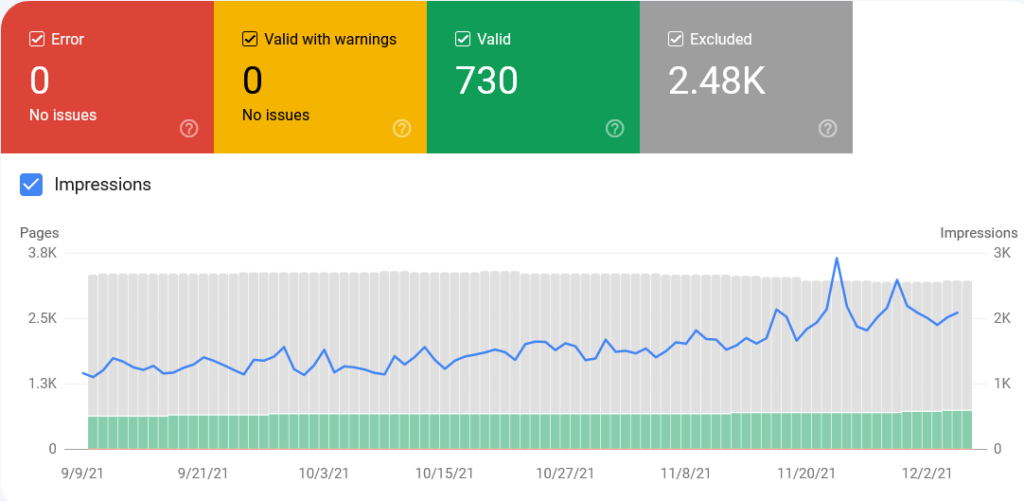

Clicking on the “Coverage” link on the left side of the dashboard brings up a snapshot like the example below:

Clicking on each of the four tabs (as well as the “Impressions” box) shows or hides that statistic in the graph below them.

You can see the number of URLs on your site (the total indexed and not indexed) and the number of impressions you get each day over the date range.

Lastly, you can see the number of URLs in each color-coded status, which we’ll break down now.

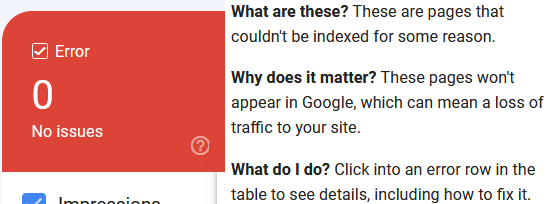

Error

This will give you a list of all of the pages on your site which Google couldn’t index because of an error.

This shouldn’t be confused with pages which you specifically set to not be indexed, like administrative pages, private content pages, or non-canonical versions of pages.

Of all the pages which aren’t being indexed, it’s a good idea to prioritize these because they can reveal pages which are unresponsive on your site for various reasons.

GSC will specify the error associated with each page if you have any listed here.

You can look up the list of coverage errors you might see here for individual pages, each of which keeps the affected page from being indexed.

It’s also a good idea to start here because most of these are technical errors and tend to be quick fixes.

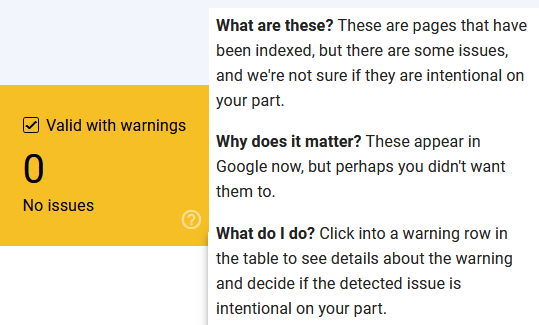

Valid With Warnings

“Valid with warnings” means that the pages listed here are in Google’s index, but there’s an issue Google wants you to know about.

A common example of a warning which might appear here is when Google sees that a URL has been blocked by your robots.txt file but has indexed it anyway.

This will return the warning “Indexed, though blocked by robots.txt”.

This can happen to URLs which are being found via links from other pages on or off your site.

So while you’ve intentionally chosen to block the URL in the robots.txt file on your site, Google is still finding and indexing that URL by way of those links.

By including the URL in this “Valid With Warnings” section, Google is letting you know of a possible issue so that you can act accordingly.

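To make sure the URL stays out of Google’s index, you can add the noindex meta tag to the page itself. Keep in mind that Google has to crawl the page to see that tag, so the robots.txt block needs to be lifted for it to work. You can also remove the URL using the removal tool, though that removal is only temporary.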
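If you want to sanity check a URL before deciding which fix to use, the rough Python sketch below might help. It only uses the standard library and works on text you paste in, so nothing is fetched from your site. The function names `is_blocked` and `has_noindex` are my own, and Python’s built-in robots.txt parser does not match rules exactly the way Googlebot does, so treat the result as a hint rather than a verdict.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name.lower(): (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            # content looks like "noindex, follow"; split it into single directives
            self.directives.extend(
                d.strip().lower() for d in attr.get("content", "").split(",")
            )


def has_noindex(html: str) -> bool:
    """True if the page's HTML carries a noindex robots meta directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


def is_blocked(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """True if the given robots.txt text disallows `agent` from fetching `url`."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return not rules.can_fetch(agent, url)


# Hypothetical robots.txt and page, for illustration only
robots_txt = "User-agent: *\nDisallow: /private/\n"
page_html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
url = "https://example.com/private/report.html"

if is_blocked(robots_txt, url) and has_noindex(page_html):
    print("Blocked AND noindex: Google can't crawl the page to see the noindex tag.")
```

A URL which is both blocked and tagged noindex is the combination to watch for, since the block hides the tag from Google.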

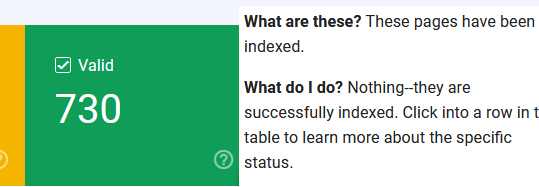

Valid

This is a list of all of the URLs which are currently indexed in Google.

Its bots have successfully found and crawled the URL and added it to Google’s index. In other words, if you search for any of the URLs listed here, they should appear in Google.

Conversely, it’s worth mentioning that if you have any specific URLs you don’t want to appear in Google, you can search for them here to ensure that they’re not indexed.

Otherwise, both you and Google have done your jobs and no additional actions are necessary on these URLs.

Excluded

The “Excluded” tab in the Google Search Console Coverage section can be the most nebulous of the bunch.

This lists all of the URLs on your site which are not currently in Google’s index.

In the domain example at the top of this page, you might have noticed that there are roughly three times as many excluded URLs as valid ones, with 730 valid and 2.48k excluded.

In other words, less than 25% of the site’s content is appearing in Google. That sounds pretty bad, right?
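For anyone who wants to check that math on their own numbers, here is a quick sketch. I’m treating the rounded 2.48k figure as 2,480 and ignoring the “Error” and “Valid with warnings” counts, so the result is approximate. The helper name `indexed_share` is just for illustration.

```python
def indexed_share(valid: int, excluded: int) -> float:
    """Fraction of known URLs (valid + excluded) which are indexed."""
    total = valid + excluded
    return valid / total if total else 0.0


valid = 730
excluded = 2480  # assumption: 2.48k taken as 2,480

print(f"{excluded / valid:.1f}x as many excluded as valid")      # 3.4x
print(f"{indexed_share(valid, excluded):.1%} of URLs indexed")   # 22.7%
```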

The important thing to keep in mind is that most of the URLs appearing here are non-canonical versions of pages which are actually indexed. The bulk of these will typically be the canonical URLs with extra parameters added to them.

With that in mind, it’s normal to have a lot more excluded URLs than valid URLs listed here.
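To get a feel for how many of your excluded URLs are just variations of a page which is already indexed, you could group them by their parameter-free version. The sketch below assumes that the canonical URL is simply the URL with its query string and fragment removed, which won’t be true for every site (the real canonical is whatever your pages declare and Google accepts). The function names and sample URLs are made up for the example.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def strip_params(url: str) -> str:
    """Drop the query string and fragment, keeping scheme, host, and path."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def group_variants(urls):
    """Map each parameter-free URL to the list of variants seen for it."""
    groups = defaultdict(list)
    for url in urls:
        groups[strip_params(url)].append(url)
    return dict(groups)


# Hypothetical excluded URLs, for illustration only
excluded_urls = [
    "https://example.com/post/?utm_source=newsletter",
    "https://example.com/post/?replytocom=12",
    "https://example.com/post/#main",
    "https://example.com/other-post/?share=twitter",
]

for base, variants in group_variants(excluded_urls).items():
    print(f"{base}: {len(variants)} variant(s)")
```

If most of the groups point back to a URL which appears in the “Valid” list, the big excluded number is probably nothing to worry about.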

If you scroll beneath the graph, you’ll see a breakdown of the reasons these URLs have been excluded:

Clicking on any one of these blocks gives you a list of the URLs affected by the issue in question.

Most of these are self-explanatory, but let’s take a closer look at the first two, which account for the lion’s share of the excluded URLs: those which have been discovered but not indexed, and those which have been crawled but not indexed.

Discovered – Currently Not Indexed

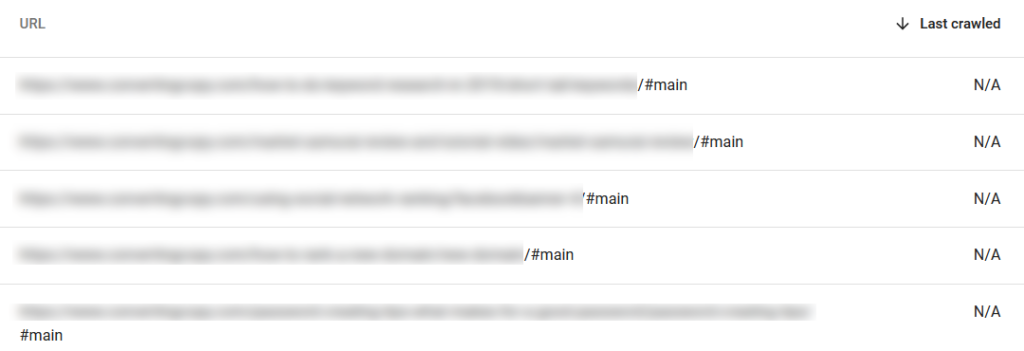

After clicking on the “Discovered – currently not indexed” block, I got a list of URLs which Google is aware of but hasn’t crawled:

Most of the URLs listed here (for this example site) end in “#main”. Visiting any of them takes me to an image on that respective URL’s page.

This is a product of how the site is set up and its theme. In this case, I want to filter all instances of this example out.

To do this, click “Export” at the top of the page to export the results to a spreadsheet of your choice.

After filtering these out in Excel, I’m left with 28 entries, half of which are WordPress-specific tag or category URLs.

Focusing only on pages of actual written content leaves me with about a dozen URLs.
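If you’d rather not do this filtering by hand, the same steps can be sketched in a few lines of Python. This assumes you exported the list as a CSV, that the URL column is named `URL`, and that your tag and category archives live under `/tag/` and `/category/` (the WordPress defaults). Adjust those to match your own export and site. The function name `content_urls` and the sample rows are made up for the example.

```python
import csv
import io


def content_urls(csv_file, url_column="URL"):
    """Return URLs from the export, minus '#main' links and tag/category archives."""
    keep = []
    for row in csv.DictReader(csv_file):
        url = row[url_column].strip()
        if url.endswith("#main"):
            continue  # theme-generated image links
        if "/tag/" in url or "/category/" in url:
            continue  # WordPress archive pages (assumed default URL bases)
        keep.append(url)
    return keep


# Stand-in for an exported file; with a real export you would use
# open("your-export.csv", newline="") instead.
sample = io.StringIO(
    "URL,Last crawled\n"
    "https://example.com/some-post/#main,\n"
    "https://example.com/tag/seo/,\n"
    "https://example.com/category/news/,\n"
    "https://example.com/some-post/,\n"
)

for url in content_urls(sample):
    print(url)
```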

According to Google, “Discovered – currently not indexed” refers to URLs which were found but not yet crawled.

Typically, Google was going to crawl the URL but expected that doing so would overload the site, so it rescheduled the crawl. That’s why there is no last crawl date listed for these URLs.

The URLs in this example site are years old at this point, so it’s possible they just got overlooked.

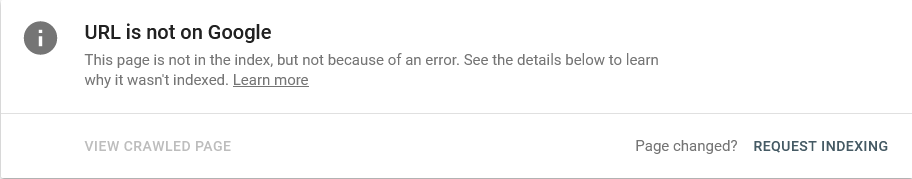

As I laid out in my overview on how to add a page to Google, you can manually request indexing by entering the URL in GSC and clicking “request indexing” like below:

Check back after a few days. If the URL is still listed as “Discovered – currently not indexed” or has moved to “Crawled – currently not indexed”, the problem likely has to do with the content itself.

I address this in the next section: URLs which have been crawled but are still not indexed.

Crawled – Currently Not Indexed

You might actually have more results in the “Crawled – currently not indexed” pile than in the pile of URLs which Google hasn’t crawled yet.

These are pages which Google has actively looked at on your site and decided not to add to its index.

Awkward.

I looked at the URLs listed on my test site as “crawled – currently not indexed” and most of them are similar to the “#main” issue. These are URLs which I don’t want showing in Google.

Still, there are plenty of actual articles which SHOULD at least exist in Google.

I’ve talked before about the reasons Google might not index a page which it has crawled.

More often than not, if you can rule out technical errors, it comes down to weak content.

Again, awkward.

Inspecting a handful of the URLs on my example site, I can see that these are generally older, shorter posts which are thin on content.

Many of them contain nothing but text, so they would benefit from images, a video if possible, and additions and updates to the written content itself.

Once you’ve made these updates, and perhaps noted within the article that it has been updated, try resubmitting the URL in GSC.

In Summary

The “Coverage” section in Google Search Console can tell us about issues with pages on our site which are keeping them from appearing in Google.

This may be a technical error or something more subjective like weak content.

In the case of a technical error, the tool can advise you as to precisely what the issue is.

If it’s more of a content issue, you can tweak the content accordingly and resubmit. Once that page gets indexed, you can use that as a clue to get an idea of the kind of content Google believes offers value.

Either way, this tool gives you a good idea of which pages need what kind of tune-up.

You should pay attention to the “Coverage” section on a regular basis to make sure that every URL you want to appear in Google is visible.